Multisensory Processing

How do we integrate information from vision and touch for object processing? Can these results be used to improve object recognition in robots?

A large series of experiments combining computer graphics with 3D printing technology shows that humans are not only visual but also haptic experts. We can process very complex shape information by touch alone!

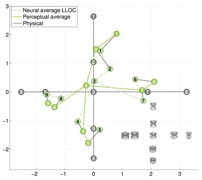

Nina Gaißert has shown that both similarity and categorization judgments are highly similar across the visual and haptic modalities. Using multidimensional scaling (MDS), we reconstructed the perceptual spaces of visual and haptic exploration. In both vision and touch, these perceptual spaces matched the highly complex physical input spaces almost perfectly. In addition, we have shown that categorization and similarity ratings are intimately connected, and that the categorization task constrains the reconstructed clusters in perceptual space (Gaissert et al., 2011).
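To make the reconstruction step concrete, here is a minimal sketch of how a perceptual space can be recovered from pairwise dissimilarities and compared with a physical parameter space. It assumes classical (Torgerson) MDS and a Procrustes-style fit measure. The MDS variant and fit measure used in the actual studies are not stated here, and the 3x3 grid of "objects" is made-up illustration data. Similarity ratings would first have to be converted to dissimilarities (for example, maximum rating minus rating).

```python
import numpy as np


def classical_mds(dissimilarity, n_dims=2):
    """Embed items in n_dims dimensions from a symmetric dissimilarity matrix."""
    d = np.asarray(dissimilarity, dtype=float)
    n = d.shape[0]
    # Double-center the squared dissimilarities to obtain a Gram matrix.
    centering = np.eye(n) - np.ones((n, n)) / n
    gram = -0.5 * centering @ (d ** 2) @ centering
    # Keep the eigenvectors belonging to the largest eigenvalues.
    eigvals, eigvecs = np.linalg.eigh(gram)
    order = np.argsort(eigvals)[::-1][:n_dims]
    scale = np.sqrt(np.clip(eigvals[order], 0.0, None))
    return eigvecs[:, order] * scale


def procrustes_fit(reference, configuration):
    """Goodness of fit in [0, 1] after optimal translation, rotation and scaling."""
    a = reference - reference.mean(axis=0)
    b = configuration - configuration.mean(axis=0)
    a = a / np.linalg.norm(a)
    b = b / np.linalg.norm(b)
    singular_values = np.linalg.svd(a.T @ b, compute_uv=False)
    return float(singular_values.sum() ** 2)


# Hypothetical physical space: nine objects on a 3x3 grid of two shape parameters.
physical = np.array([[x, y] for x in range(3) for y in range(3)], dtype=float)
# Idealized "ratings": dissimilarity equals distance in the physical space.
ratings = np.linalg.norm(physical[:, None, :] - physical[None, :, :], axis=-1)

perceptual = classical_mds(ratings, n_dims=2)
fit = procrustes_fit(physical, perceptual)
print(f"fit between physical and reconstructed space: {fit:.3f}")
```

With real ratings the fit would fall below 1, and its size indicates how faithfully the perceptual space mirrors the physical one.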

Lisa Whittingstall (née Dopjans) demonstrated that haptic recognition of faces, one of the most complex object classes the brain deals with, is not only possible, but that information can also be transferred between vision and touch for this task. In addition, experiments with a gaze-restricted aperture display show that humans can even be trained to become “experts” at this unusual face recognition task based on serial encoding (Wallraven et al., 2013).

This combination of visual and haptic information will also enable better object recognition in robotic applications. To this end, Björn Browatzki is implementing perceptually motivated object recognition skills on two robotics platforms: the Care-O-Bot platform of the Fraunhofer Institute for Manufacturing Engineering and Automation (Stuttgart, Germany), and the iCub platform of the Italian Institute of Technology (Genoa, Italy). On the iCub platform, we recently demonstrated a highly effective active exploration framework that derives optimal view selection for object recognition (Browatzki et al., 2014).

A perceptual study by Haemy Lee recently demonstrated that active exploration of objects does indeed lead to a better representation of shape than passive exploration. To show this, we used a tablet on which people could rotate and explore novel 3D shapes. We then asked participants to rate the similarity between two successively presented shapes in a number of different experimental conditions. We found that active exploration represented the physical parameter space consistently better than all other conditions.

In a neuroimaging study, Haemy Lee Masson showed that LOC (lateral occipital complex), an area that has been implicated in visual and haptic processing of shape, not only integrates information from vision and touch, but also carries a precise and faithful representation of an external shape parameter space. Using computer graphics, we created a complex shape space and printed the resulting objects in 3D. A visual and a haptic participant group then rated the similarity of these objects, which allowed us to reconstruct their visual and haptic perceptual spaces. We then scanned the brains of these participants using fMRI to find out which brain areas carried a signature of the previously defined perceptual space. We found that area LOC was implicated in both the visual and the haptic group, although the input modality and exploration procedure varied considerably between the two groups. Our results suggest that this area carries a detailed, potentially supramodal, representation of shape.
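The analysis used to detect this "signature" is not described here. One common way to ask the question is to compare the pairwise dissimilarities of activation patterns in a region with the pairwise distances in the perceptual space. The sketch below illustrates that idea only, under stated assumptions: the function names are hypothetical, the dissimilarity measure (1 minus Pearson correlation) is an assumption, and the numbers are made up rather than taken from fMRI data.

```python
import numpy as np


def upper_triangle(matrix):
    """Return the entries above the diagonal as a flat vector."""
    m = np.asarray(matrix, dtype=float)
    rows, cols = np.triu_indices(m.shape[0], k=1)
    return m[rows, cols]


def neural_dissimilarity(patterns):
    """1 - Pearson correlation between activation patterns (one row per object)."""
    return 1.0 - np.corrcoef(np.asarray(patterns, dtype=float))


def space_match(perceptual_dissimilarity, patterns):
    """Correlate perceptual distances with neural pattern dissimilarities."""
    neural = upper_triangle(neural_dissimilarity(patterns))
    perceptual = upper_triangle(perceptual_dissimilarity)
    return float(np.corrcoef(perceptual, neural)[0, 1])


# Toy data: 4 objects x 5 voxels in a hypothetical region (made-up numbers).
patterns = np.array([
    [1.0, 0.2, 0.1, 0.9, 0.3],
    [0.9, 0.3, 0.2, 0.8, 0.4],
    [0.2, 1.0, 0.9, 0.1, 0.7],
    [0.1, 0.9, 1.0, 0.2, 0.8],
])
# Hypothetical perceptual distances: objects 0/1 and objects 2/3 are judged similar.
perceptual = np.array([
    [0.0, 1.0, 4.0, 4.0],
    [1.0, 0.0, 4.0, 4.0],
    [4.0, 4.0, 0.0, 1.0],
    [4.0, 4.0, 1.0, 0.0],
])

match = space_match(perceptual, patterns)
print(f"match between perceptual and neural dissimilarities: {match:.3f}")
```

A region whose patterns yield a high match in both the visual and the haptic group would be a candidate for a modality-independent shape representation.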

Information transfer from vision to touch (and vice versa) exhibits large inter-individual variability. Haemy Lee Masson investigated whether anatomical white-matter correlates could predict such cross-modal transfer performance in one of our typical shape-categorization tasks. In a collaboration with the Université de Bordeaux (Laurent Petit), we found that fast visual learners exhibited higher fractional anisotropy (FA) in two white-matter tracts (left SLF_ft and left VOF). Importantly, the cross-modal haptic performance correlated with FA in ILF and with axial diffusivity (AD) in SLF_ft. These findings provide clear evidence that individual variation in visuo-haptic performance can be linked to micro-structural characteristics of white-matter pathways.
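For readers unfamiliar with the two diffusion measures, the sketch below computes FA and AD from the three eigenvalues of a diffusion tensor using their standard definitions. The function name and the example eigenvalues are illustrative assumptions. In practice these values come from a diffusion-MRI processing pipeline and are averaged along each tract.

```python
import math


def fa_and_ad(eigenvalues):
    """Return (fractional anisotropy, axial diffusivity) for one diffusion tensor."""
    l1, l2, l3 = sorted(eigenvalues, reverse=True)
    mean = (l1 + l2 + l3) / 3.0
    spread = (l1 - mean) ** 2 + (l2 - mean) ** 2 + (l3 - mean) ** 2
    total = l1 ** 2 + l2 ** 2 + l3 ** 2
    # FA is 0 for isotropic diffusion and approaches 1 for diffusion along one axis.
    fa = math.sqrt(1.5 * spread / total) if total > 0 else 0.0
    # AD is the diffusivity along the principal direction (largest eigenvalue).
    return fa, l1


# Illustrative eigenvalues in units of 1e-3 mm^2/s (made-up numbers).
fa, ad = fa_and_ad([1.6, 0.4, 0.3])
print(f"FA = {fa:.2f}, AD = {ad:.2f}")
```

Higher FA means that diffusion is more strongly directional, which is often interpreted as more coherently organized white matter.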

• B. Browatzki, V. Tikhanoff, G. Metta, H. Bülthoff, and C. Wallraven. Active In-Hand Object Recognition on a Humanoid Robot. IEEE Transactions on Robotics, 30: 1260-1269, 2014.

• C. Wallraven, L. Whittingstall, and H. H. Bülthoff. Learning to recognize face shapes through serial exploration. Experimental Brain Research, 226(4): 513-523, 2013.

• N. Gaissert, H. H. Bülthoff, and C. Wallraven. Similarity and categorization: From vision to touch. Acta Psychologica, 138: 219-230, 2011.

• H. Lee and C. Wallraven. Exploiting object constancy: effects of active exploration and shape morphing on similarity judgments of novel objects. Experimental Brain Research, 225: 277-289, 2013.

• H. Lee Masson, J. Bulthé, H. Op De Beeck, and C. Wallraven. Visual and Haptic Shape Processing in the Human Brain: Unisensory Processing, Multisensory Convergence, and Top-Down Influences. Cerebral Cortex, 2015. doi: 10.1093/cercor/bhv170

• H. Lee Masson, C. Wallraven*, and L. Petit. Can touch this: cross-modal shape categorization performance is associated with micro-structural characteristics of white matter association pathways. Human Brain Mapping, 2017.

page created by C. Wallraven, last change March 5th, 2019