Social Face Processing

How are faces recognized? Which features are important? Does culture play a role?

We have recorded a large 3D database of Korean faces. This has been used to construct a morphable model with which we can precisely manipulate faces (Shin et al., 2012). Since the database is compatible with the MPI Face Database (Troje et al., 1996; faces.kyb.tuebingen.mpg.de), we can easily create cross-cultural morphs. Studies on the cross-cultural perception of faces (such as the other-race effect) are currently underway (Lee et al., 2011).

• C. Dahl, N. Logothetis, H. Bülthoff, and C. Wallraven. The Thatcher illusion in humans and monkeys. Proceedings of the Royal Society of London B, 277(1696):2973-2981, 10 2010.

• C. Dahl, C. Wallraven, H. Bülthoff, and N. Logothetis. Humans and macaques employ similar face-processing strategies. Current Biology, 19(6):509-513, 03 2009.

• A. Schwaninger, J. Lobmaier, C. Wallraven, and S. Collishaw. Two routes to face perception: Evidence from psychophysics and computational modeling. Cognitive Science, 33(8):1413-1440, 09 2009.

• A. Shin, S. Lee, H. Bülthoff, and C. Wallraven. A morphable 3D-model of Korean faces. In Proceedings of SMC 2012, 10 2012.

• R. Lee, I. Bülthoff, R. Armann, C. Wallraven, and H. Bülthoff. Investigating the other-race effect in different face recognition tasks. Asian Pacific Conference on Vision (APCV 2011), 2011:1, 07 2011.

• C. Wallraven. Touching on face space: Comparing visual and haptic processing of face shapes. Psychonomic Bulletin & Review, 21(4):995-1002, 2014.

• C. Wallraven and L. Dopjans. Visual experience is necessary for efficient haptic face recognition. NeuroReport, 24(5):254-258, 2013.

• M. Nusseck, D. W. Cunningham, C. Wallraven, and H. Bülthoff. The contribution of different facial regions to the recognition of conversational expressions. Journal of Vision, 8(8):1:1-23, 06 2008.

• D. W. Cunningham and C. Wallraven. Temporal information for the recognition of conversational expressions. Journal of Vision, 9(13):1-17, 12 2009.

• K. Kaulard, D. W. Cunningham, H. H. Bülthoff, and C. Wallraven. The MPI facial expression database - a validated database of emotional and conversational facial expressions. PLoS One, 7(3):e32321, 01 2012.

• A. Aubrey, D. Marshall, P. Rosin, J. Vandeventer, D. Cunningham, and C. Wallraven. Cardiff conversation database (CCDB): A database of natural dyadic conversations. In Proceedings of IEEE Conference on Computer Vision and Pattern Recognition (CVPR), V&L Net Workshop, 06 2013.

• J. Kang, B. Ham, and C. Wallraven*. Cannot avert the eyes: Reduced attentional blink toward others’ emotional expressions in empathic people. Psychonomic Bulletin & Review, in press, 2016.

Do monkeys and humans process faces similarly?

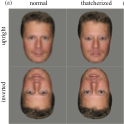

In addition, we are interested in comparing face perception across species. Two of our recent studies have shown that monkeys and humans look at faces in a very similar way and that they are even “fooled” by the same perceptual illusion (Dahl et al., 2009; 2010)!

Shown on the left are one human face and one monkey face. If you look at the human face on the top right, you will immediately notice that it looks grotesque, as its eyes and mouth have been inverted within the face. When this face is turned upside-down, however, the grotesqueness disappears. This is the well-known Thatcher illusion. If you look at the monkey faces, chances are that all of them will look equally “normal” to you. This is because humans are not experts in monkey faces. If you present these faces to monkeys, however, their gaze is immediately drawn to the grotesque monkey face, as something is not right about it. Conversely, they are no more interested in the manipulated human face than in its “normal” version. We showed for the first time that monkeys and humans do indeed fall prey to the exact same illusion.

To what degree can a face space be represented by touch?

The concept of the “face space” is highly influential in psychology. It posits that faces are represented in a vector space in which, for example, the average face is the coordinate origin and distinctive, atypical faces lie in the periphery. This concept is able to explain many facets of face perception.
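The face-space idea can be illustrated with a minimal sketch. It assumes that each face is already described by a vector of shape parameters (the two-dimensional toy vectors and all function names below are hypothetical, chosen only for illustration). The average face is moved to the origin, and the distance of a face from the origin serves as a simple measure of how atypical it is.

```python
import math

def mean_face(faces):
    """Component-wise average of equal-length face vectors."""
    n = len(faces)
    return [sum(component) / n for component in zip(*faces)]

def to_face_space(faces):
    """Shift all faces so that the average face sits at the origin."""
    center = mean_face(faces)
    return [[x - c for x, c in zip(face, center)] for face in faces]

def distinctiveness(face_coords):
    """Euclidean distance from the origin, i.e. from the average face."""
    return math.sqrt(sum(x * x for x in face_coords))

# Hypothetical toy data: three faces, two made-up shape parameters each.
faces = [[1.0, 2.0], [3.0, 2.0], [2.0, 5.0]]

centered = to_face_space(faces)
scores = [distinctiveness(f) for f in centered]

for face, score in zip(faces, scores):
    print(face, "distance from average face:", round(score, 3))
```

In this toy example the third face lies farthest from the origin and would therefore count as the most atypical one. Real face spaces have many more dimensions, and the choice of distance measure is itself an assumption.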

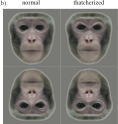

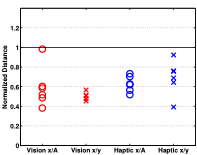

Here, we went one step further: since our other studies have shown that shape knowledge is very well shared across modalities (vision and touch), we wanted to know whether touch could re-create a face space as well as vision does (Wallraven, 2014). Using computer graphics and a morphable model (see above), we created a structured face space and then tested participants using either vision or touch in a standard similarity rating task (see picture on the left for one participant exploring a face mask in the touch condition). We found that although there were some similarities between the visual and touch-based “face spaces”, there were still critical differences in the detailed topology of the space. This may indicate that faces are indeed special to vision (see also our study on blind participants trying to recognize faces from touch; Wallraven and Dopjans, 2013).
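One simple way to quantify how well two such spaces agree is to correlate the pairwise dissimilarities obtained in each modality. The sketch below is only an illustration of that general idea, not the analysis used in the study; the rating values and function names are hypothetical.

```python
import math

def upper_triangle(matrix):
    """Values above the diagonal of a square matrix, row by row."""
    n = len(matrix)
    return [matrix[i][j] for i in range(n) for j in range(i + 1, n)]

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)

# Hypothetical dissimilarity ratings for three faces (symmetric, zero diagonal).
visual = [[0.0, 2.0, 4.0],
          [2.0, 0.0, 6.0],
          [4.0, 6.0, 0.0]]
haptic = [[0.0, 1.0, 3.0],
          [1.0, 0.0, 2.0],
          [3.0, 2.0, 0.0]]

# Compare only the unique face pairs (entries above the diagonal).
agreement = pearson(upper_triangle(visual), upper_triangle(haptic))
print("correlation between visual and haptic dissimilarities:", agreement)
```

A correlation close to 1 would mean that both modalities order the face pairs in much the same way, while lower values point to differences in the structure of the two spaces.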

How do we process emotional and conversational facial expressions? Are Koreans and Germans similarly expressive? Can we teach the computer to recognize expressions?

As the space of facial expressions is very large, we need to create good expression databases. Currently, we are using a German database for both perceptual and computational experiments (Kaulard et al., 2012); recording of a Korean database is underway. Results show that humans are extremely good at interpreting facial expressions – computers still have a long way to go.

In the Cognitive Systems lab, we are currently recording a database of Korean conversational expressions. The database is modeled after the MPI database of Facial Expressions and will contain more than 50 expressions at two levels of intensity. With both databases, we will conduct experiments in both Korea and Germany to investigate within-cultural and cross-cultural processing of facial expressions in unprecedented detail.

In collaboration with the University of Cardiff, and with funding from the Research Institute of Visual Computing, we are recording a database of conversations with the specific aim of investigating facial movements in the context of natural conversations. For this, we recorded more than 60 conversations between two people, each about 5 minutes long. Recordings were captured with standard video cameras as well as two state-of-the-art 4D scanners. We are currently post-processing the data and have released parts of the database to the general public (Aubrey et al., 2013).

How automatic is processing of emotional facial expressions? Is this different for empathic and non-empathic people?

In our experiment, we show that empathic people cannot help but process emotional faces, as these capture their attention.

To demonstrate this, we use a large sample of 100 participants who undergo an attention test. Importantly, we include a control condition, in which we show not only another person's emotional face in the attention task, but also participants' own emotional faces. Since empathy per se is directed towards other people, we hypothesized that the attentional effect should not happen for own faces, which is exactly what we found in our results. We were also able to link the amount of attentional capture with daily prosocial behaviors as measured by post-experiment online surveys, showing a connection between empathy, attentional processes, and helping other people.


page created by C. Wallraven, last change March 5th, 2019